How to apply face recognition API technology to data journalism with R and Python

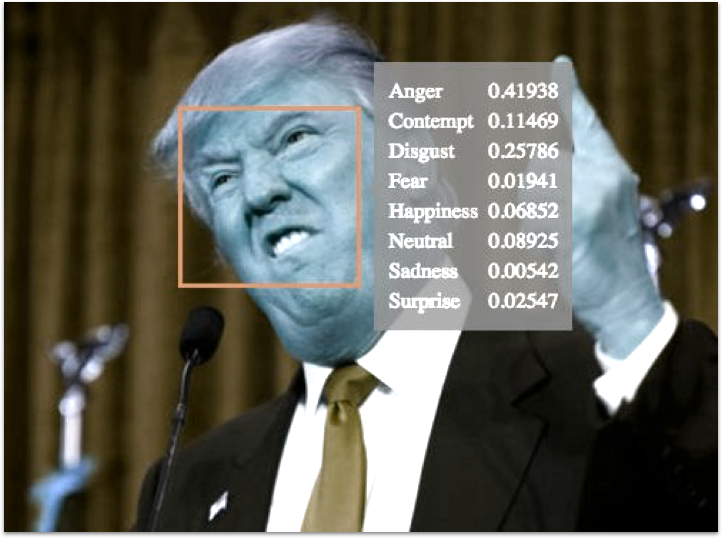

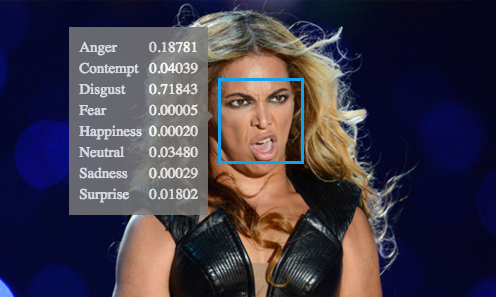

The Microsoft Emotion API draws on state-of-the-art computer vision research from Microsoft Research. It uses a deep convolutional neural network trained to classify the facial expressions of people in videos and images. This post is an attempt to explain how to apply the API to data-driven reporting.

Let’s be honest, the final debate was depressing. The negativity, the personal allegations, and Trump’s Beelzebub-like facial expressions made it difficult to stay up until 3:30am with my American wife and watch this combat, which resembled an old feisty couple close to divorce. However, the debate was a gold mine for computer-assisted reporting. One of the APIs I recently stumbled across when talking to Microsoft’s research lab is a neat emotion video API. It is a facial recognition API built on a deep convolutional neural network trained to classify the facial expressions of people in videos and images.

The team at Microsoft promises that users receive a confidence score across the “universal emotions” based on the associations between facial expressions and emotions identified from years of psychology literature. According to Anna Roth at Microsoft, the model is trained on tens of thousands of images labeled with the universal expressions.

The Debates are a goldmine for emotion research

One article argued that the best way to experience the extremes of this year’s presidential debates is to turn off the sound. The last three presidential debates, besides being difficult to watch, offered me a unique opportunity to apply facial recognition technology to journalism. We will take a few clips and perform a simple analysis of facial expressions in the context of the spoken words. The analysis also served as the basis for an article we ran at The Economist.

Python setup:

We will run the API on a video clip from the third debate, using the last 5 minutes as a sample. This should give us a good taste of the two candidates’ facial expressions. You can set up a free API key here.

    import httplib
    import urllib
    import base64
    import json
    import pandas as pd
    import numpy as np
    import requests

    # You have to sign up for an API key, which has some allowances.
    # Check the API documentation for further details.
    _url = 'https://api.projectoxford.ai/emotion/v1.0/recognizeInVideo'
    _key = 'insert your key here'  # paste your primary key here
    _maxNumRetries = 10

The Python 2 setup requires us to load a few libraries. Next we submit the URL of the video we want analysed and keep the location the API returns for the result.

    # URL direction: I hosted this on my domain
    urlVideo = 'http://datacandy.co.uk/blog2.mp4'

    # Computer Vision parameters
    paramsPost = {'outputStyle': 'perFrame'}

    headersPost = dict()
    headersPost['Ocp-Apim-Subscription-Key'] = _key
    headersPost['Content-Type'] = 'application/json'

    jsonPost = {'url': urlVideo}

    responsePost = requests.request('post', _url, json=jsonPost, data=None,
                                    headers=headersPost, params=paramsPost)

    if responsePost.status_code == 202:  # everything went well!
        # the result has to be fetched later from this location
        videoIDLocation = responsePost.headers['Operation-Location']
        print(videoIDLocation)

Next we harvest the response, after another cup of coffee (we need to wait a bit for the response).
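The wait before harvesting can be made explicit with a small polling loop. The sketch below is one hedged way to do it, not the approach used for this article: `wait_for_result`, `fetch_status`, the poll interval and the retry limit are hypothetical names and values, and the status strings `Succeeded` and `Failed` are assumptions about what the operation endpoint reports, so check them against the API documentation.

```python
import time


def wait_for_result(fetch_status, poll_seconds=30, max_polls=20, sleep=time.sleep):
    """Call fetch_status() until the job reports a final state.

    fetch_status is any zero-argument callable that returns the decoded
    JSON body of the operation-status request as a dict (hypothetical helper).
    """
    for _ in range(max_polls):
        body = fetch_status()
        status = body.get("status", "")
        if status == "Succeeded":   # assumed status string
            return body
        if status == "Failed":      # assumed status string
            raise RuntimeError("video processing failed: %r" % (body,))
        sleep(poll_seconds)         # still queued or running, so wait and ask again
    raise RuntimeError("gave up after %d polls" % max_polls)
```

In the script, `fetch_status` could be something like `lambda: requests.get(videoIDLocation, headers=headersGet).json()`. Passing the fetch step in as a callable keeps the loop easy to try out without touching the network.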

    ## Wait a bit, it's processing
    headersGet = dict()
    headersGet['Ocp-Apim-Subscription-Key'] = _key

    jsonGet = {}
    paramsGet = urllib.urlencode({})

    getResponse = requests.request('get', videoIDLocation, json=jsonGet,
                                   data=None, headers=headersGet, params=paramsGet)

    # 'processingResult' is itself a JSON string, hence the double json.loads
    rawData = json.loads(json.loads(getResponse.text)['processingResult'])
    timeScale = rawData['timescale']
    frameRate = rawData['framerate']

    emotionPerFramePerFace = {}
    currFrameNum = 0

    for currFragment in rawData['fragments']:
        # a fragment may carry no 'events' entry, so fall back to an empty list
        for currEvent in currFragment.get('events', []):
            emotionPerFramePerFace[currFrameNum] = currEvent
            currFrameNum += 1

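    # Data collection
    person1, person2 = [], []

    for frame_no, faces in emotionPerFramePerFace.copy().items():
        for i, minidict in enumerate(faces):
            # copy each emotion score up to the top level of the face record
            for emotion, score in minidict['scores'].items():
                minidict[emotion] = score
            minidict['frame'] = frame_no
            # the first face in a frame goes to person1, any other to person2
            if i == 0:
                person1.append(minidict)
            else:
                person2.append(minidict)

    df1 = pd.DataFrame(person1)
    df2 = pd.DataFrame(person2)

    # the nested dict is no longer needed once the scores are columns
    del df1['scores']
    del df2['scores']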

    # Save each pandas data frame as a CSV file
    df1.to_csv("/your/file/path/trump.csv", index=False)
    df2.to_csv("/your/file/path/clinton.csv", index=False)

Finally, we need to save the data in a format that allows us to perform the analysis on the resulting data. We will do this via a CSV file, and continue our journey in R.
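Before moving on, a quick sanity check on the per-frame confidence scores can be useful. The sketch below is only an illustration under assumptions: `dominant_emotion` is a hypothetical helper, the rows are made-up values in the shape the script writes to CSV (one record per frame with one column per emotion), and dropping `neutral` simply mirrors the filter applied later in the R script.

```python
EMOTIONS = ("anger", "contempt", "disgust", "fear",
            "happiness", "neutral", "sadness", "surprise")


def dominant_emotion(row, ignore=("neutral",)):
    """Return the name of the highest-scoring emotion in one record."""
    scores = {name: float(row[name])          # float() also copes with CSV strings
              for name in EMOTIONS
              if name in row and name not in ignore}
    return max(scores, key=scores.get)


# Made-up rows, only to show the shape of the records; not real debate data.
rows = [
    {"frame": 0, "anger": 0.60, "happiness": 0.05, "neutral": 0.30, "sadness": 0.05},
    {"frame": 1, "anger": 0.05, "happiness": 0.05, "neutral": 0.75, "sadness": 0.15},
    {"frame": 2, "anger": 0.05, "happiness": 0.70, "neutral": 0.20, "sadness": 0.05},
]
labels = [dominant_emotion(row) for row in rows]
print(labels)  # ['anger', 'sadness', 'happiness']
```

Because the helper converts values with `float()`, the same function should also work on rows read back with `csv.DictReader`.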

R setup

Let’s tease out the good bits with the following script:

    library(dplyr)
    library(tidyr)

    ########## Trump's face
    blog_trump <- read.csv("/your/file/path/trump.csv", header = TRUE)

    trump_g <- blog_trump %>%
      gather(key, value, c(anger, contempt, disgust, fear, happiness, neutral, sadness, surprise)) %>%
      filter(key != "neutral") %>%
      filter(id == 0) %>%
      mutate(candidate = "Trump")

    # inspect the reshaped data
    View(trump_g)

    ########## Clinton's face
    blog_clinton <- read.csv("/your/file/path/clinton.csv", header = TRUE)

    clinton_g <- blog_clinton %>%
      gather(key, value, c(anger, contempt, disgust, fear, happiness, neutral, sadness, surprise)) %>%
      filter(key != "neutral") %>%
      filter(id == 1) %>%
      mutate(candidate = "Clinton")

    # Merge them
    all <- rbind(clinton_g, trump_g)

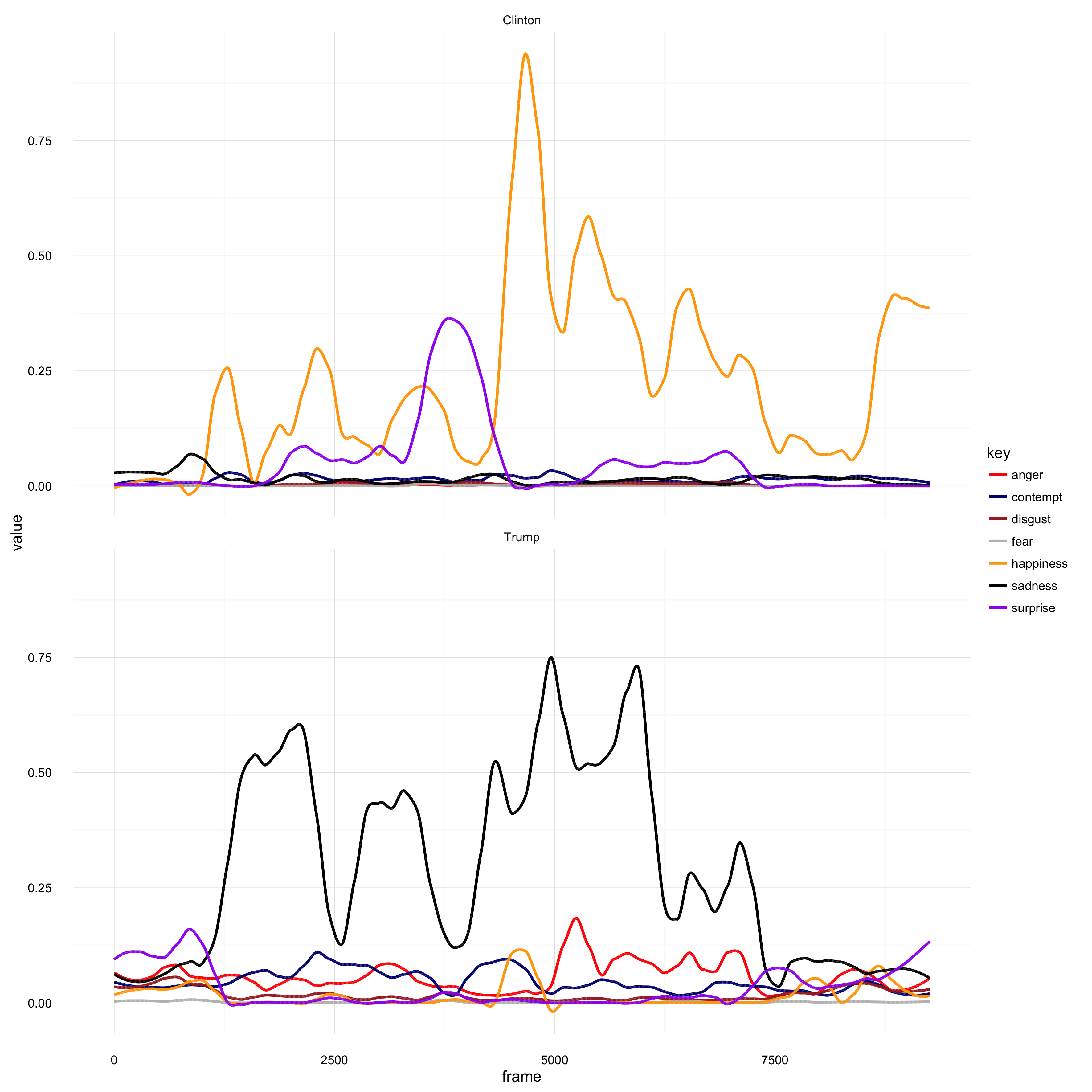

Let’s visualize the data from above over time, measured in video frames:

    library(ggplot2)

    # Smooth line chart
    ggplot(all, aes(frame, value, group = key, col = key)) +
      # geom_line() +   # would display all the non-smoothed lines
      geom_smooth(method = "loess", n = 100000, se = FALSE, span = 0.1) +
      facet_wrap(~ candidate, ncol = 1) +
      theme_minimal()

Here’s the video from which we pulled the facial recognition data:

And when you run the script above, a clean facet chart should appear for smoothed (assumed) facial emotions for both speakers, over the time interval of the last 5 minutes of the 3rd debate.
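Since the chart’s x-axis is in frames, it can help to translate frame numbers into positions in the clip. The sketch below is a hedged helper, not part of the original workflow: the function names are hypothetical, and the default of 25 frames per second is an assumption inferred from a five-minute clip spanning roughly 7,500 frames. The real value is the `frameRate` field read from the API response.

```python
def frame_to_timestamp(frame, frames_per_second=25.0):
    """Convert a frame index to an 'm:ss' position within the clip."""
    total_seconds = int(frame / frames_per_second)
    minutes, seconds = divmod(total_seconds, 60)
    return "%d:%02d" % (minutes, seconds)


def timestamp_to_frame(minutes, seconds, frames_per_second=25.0):
    """Convert a position in the clip to the nearest frame index."""
    return int(round((minutes * 60 + seconds) * frames_per_second))


print(frame_to_timestamp(5000))   # 3:20 under the assumed 25 fps
print(frame_to_timestamp(7500))   # 5:00 under the assumed 25 fps
print(timestamp_to_frame(4, 15))  # 6375 under the assumed 25 fps
```

With a different frame rate, pass it explicitly, for example `frame_to_timestamp(5000, frames_per_second=frameRate)`.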

Mr. Trump’s upset face was a sight many viewers got so used to over the previous debates that it was no surprise to see him angry in the 3rd and final debate. Was this a strategic move to intimidate his opponent and improve his chances of winning credibility?

Mark G. Frank, Professor and Chair of the Department of Communication at the State University of New York, told me that most likely it is just a personal trait. What’s important for interpreting facial recognition data is the underlying context: the spoken words, or a reaction to what someone else has said. If the facial emotion measurement doesn’t match the sentiment in the speaker’s language, something is off and worth investigating further.

There are occasions for both candidates when fake emotional expressions played an important role in their strategies. Trump always got what he wanted. One of Trump’s books, “The Art of the Deal”, reveals that in second grade he gave a teacher a black eye. Today he uses his brain instead of his fists, the book says. Could his angry and upset expressions be a relic from a past in which he got whatever he desired?

Facial recognition data, such as the data we collected via the Microsoft Emotion API, suggests that when Trump is challenged with arguments he doesn’t like, his facial expression changes. In the second debate, Clinton provoked him by mentioning his involvement in degrading a former Miss Universe. His expression promptly changed to a mixture of anger and sadness.

On the other hand, there are whole sequences in which he seems to keep his angry and sad face as a poker face. Frank calls for caution. When Trump pushes his lips together, his lip corners naturally go down. Facial recognition software is not necessarily smart and might pick up on muscle movements around the corners of Trump’s mouth. The software could incorrectly interpret them as sadness.

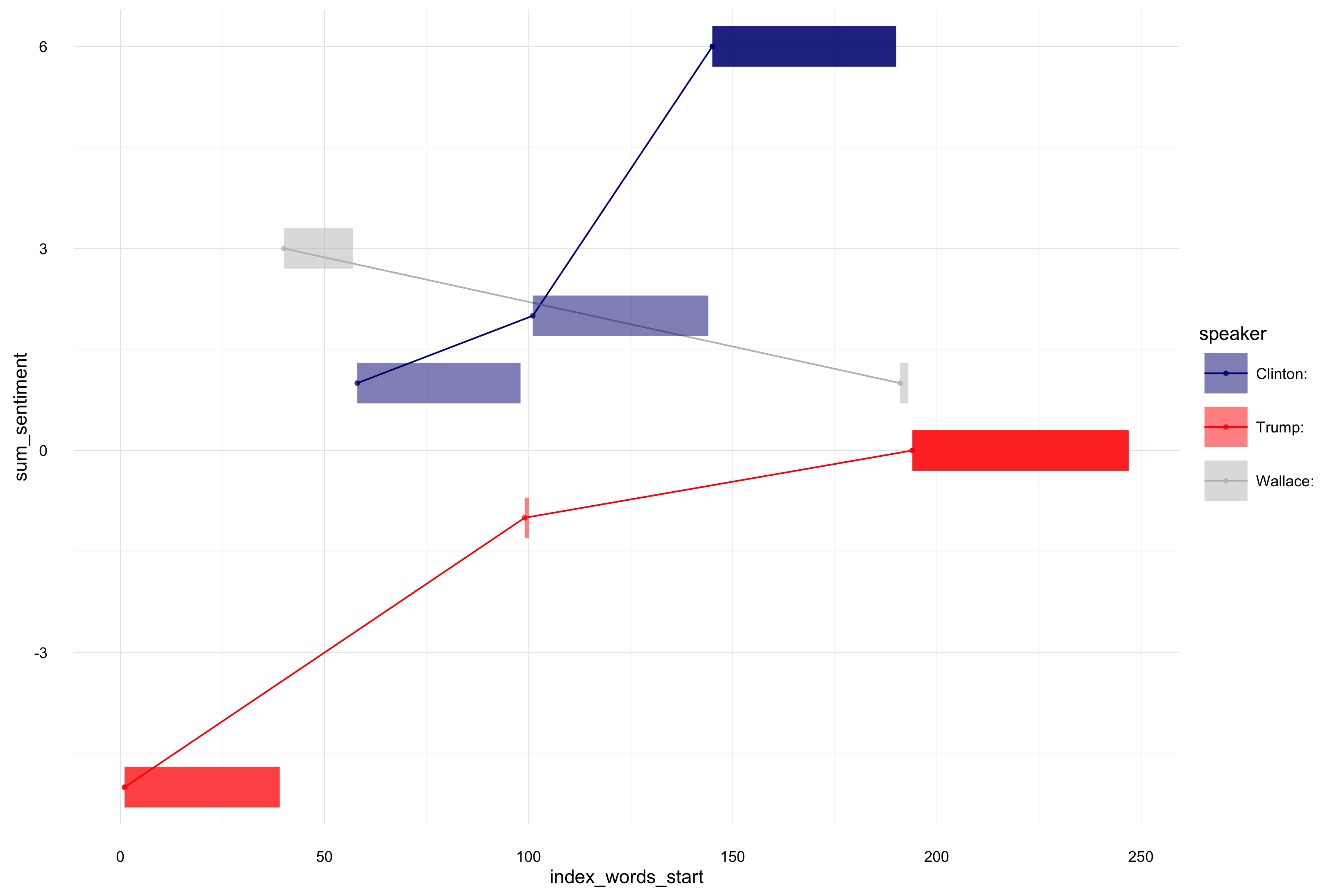

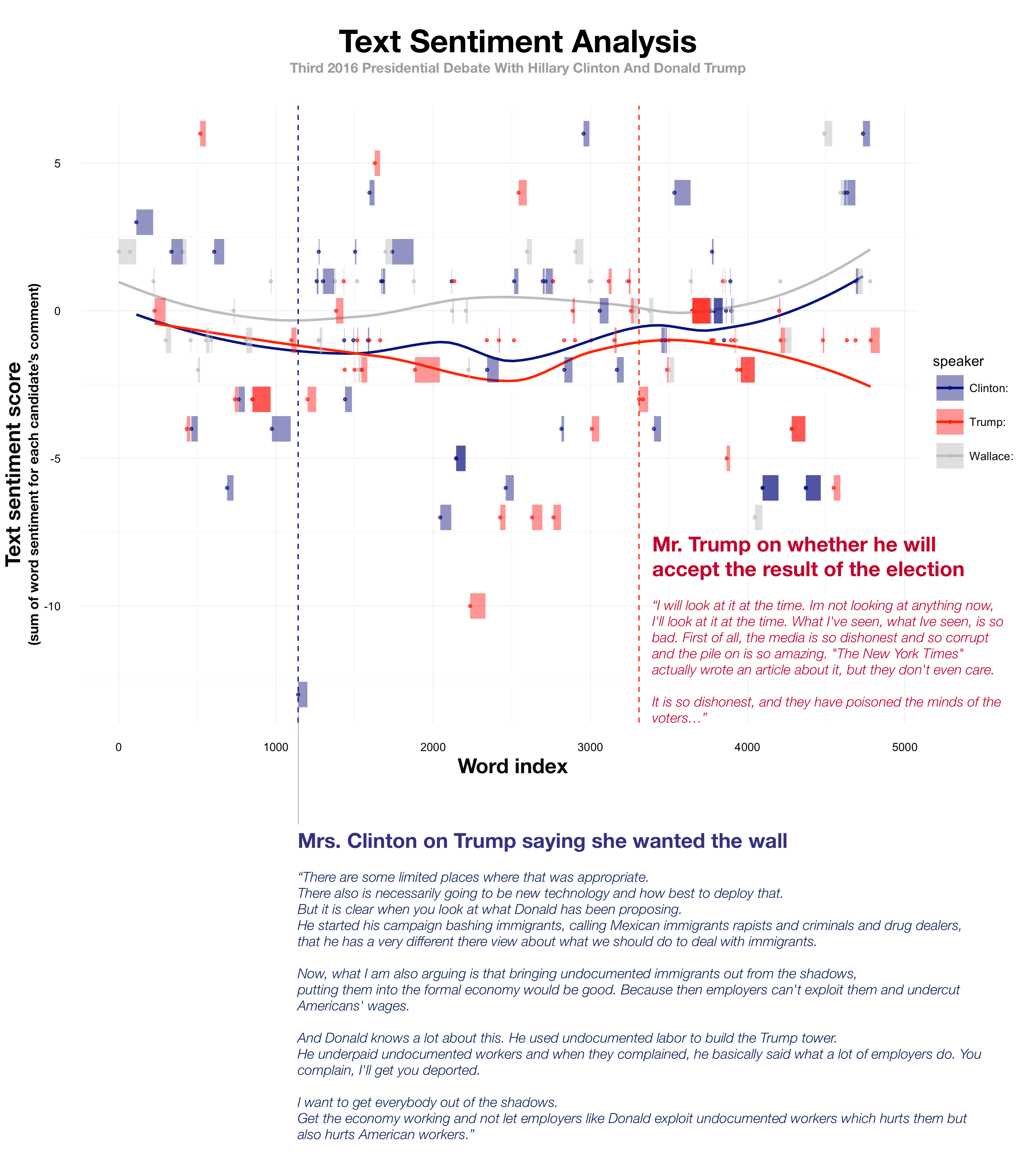

Text sentiment vs. facial sentiment

As Professor Frank said, interpreting facial recognition data depends a lot on the context, on what has been said. If we overlay a sentiment analysis of the last 5 minutes of the debate, we see that Hillary’s segments are positive, which fits somewhat with her emotional state when her happiness level spikes around the 5,000-frame mark. Equally, Mr. Trump’s anger level decreases in the final stage of the video we looked at, approaching the 7,500 mark.

(minute 4:15) Trump: We’re going to make America great. We have a depleted military. It has to be helped. It has to be fixed. We have the greatest people on Earth in our military.

This is mirrored in his reasoning on why the US healthcare system needs to be fixed; in his explanation there is no room for his sad or angry grimaces. It is more common for him to respond with these facial expressions when it is Mrs. Clinton’s turn to speak (within the three segments in the middle of the video).
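The idea of checking facial emotion against text sentiment can be sketched in a few lines. This is a rough illustration under stated assumptions, not the analysis behind the article: the valence formula (happiness minus the summed negative emotions), the helper names, and the segment values are all made up for the example, and the text sentiment is assumed to be a score between -1 and 1 from whatever sentiment tool is used.

```python
NEGATIVE = ("anger", "contempt", "disgust", "fear", "sadness")


def facial_valence(scores):
    """Happiness minus the summed negative emotions: a crude valence score."""
    return scores.get("happiness", 0.0) - sum(scores.get(n, 0.0) for n in NEGATIVE)


def find_mismatches(segments):
    """Return labels of segments whose face and text point in opposite directions."""
    flagged = []
    for segment in segments:
        face = facial_valence(segment["face"])
        text = segment["text_sentiment"]
        if face * text < 0:  # opposite signs: worth a closer look
            flagged.append(segment["label"])
    return flagged


# Made-up segments for illustration only; not measurements from the debate.
segments = [
    {"label": "A", "text_sentiment": 0.4, "face": {"happiness": 0.6, "anger": 0.1}},
    {"label": "B", "text_sentiment": 0.3, "face": {"happiness": 0.05, "anger": 0.4, "sadness": 0.3}},
    {"label": "C", "text_sentiment": -0.5, "face": {"happiness": 0.7}},
]
print(find_mismatches(segments))  # ['B', 'C']
```

A flagged segment is not a verdict, only a pointer to footage that deserves a second look in context, in line with Frank’s call for caution.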

Hillary’s fake smile stands like a Mexican wall

Hillary Clinton, too, turned out to have a facial pattern that might diverge from what she actually feels. The software picked up on Clinton’s happy face in moments when nobody was telling a joke. To understand her behaviour, we need to look closer at the context. On occasions when Mr. Trump insulted her, she used her happy face as a wall to show him that he couldn’t break her into releasing real emotions, and that she wouldn’t let him get under her skin. Frank explains that Hillary Clinton’s smiling response is a careful attempt to avoid mistakes such as becoming unsettled or forgetting the point she needs to make. Trump tried everything to pry out an honest emotional response. He hoped to unnerve her, but she stood strong.

Overall sentiment for the 3rd debate:

Let’s have a final look at the overall sentiment data. Since we have a script, it isn’t hard to run it on the entire debate. We see that both Mr. Trump and Mrs. Clinton were playing on the negative side. The last 5 minutes, for which we analysed their facial expressions, were relevant in my eyes because that is when their text sentiment diverged: Trump became more negative, Clinton more positive. And now we also know that her face was happier than his at that time.

Wrapping up:

The Microsoft Emotion API might be far from perfect. However, applying this technology to new styles of reporting could open up a different discussion about speakers, one that could lead to new conclusions.

Whether facial recognition technology will be a crucial part of every future presidential debate, Frank wouldn’t say. He was clear, though, on what a facial recognition analysis could yield: a response can tell us something about a person. While for Mrs. Clinton there is a lot of happiness attached to her attempt to guard herself, Trump chose an angry grimace as his debate-stage identity.
